At my lab's group meeting this week, one of my colleagues was showing off the tungsten cube he had purchased for use in a satellite designed to measure the Earth's mass distribution, similar to the GRACE mission I discussed earlier.

(May contain Infinity Stone)

Since we want the cube to be affected only by gravity, one of the fabrication steps is degaussing, or removing any residual magnetism. I was curious about this process, since it didn't match my previous experience with degaussing: In high school, I worked for the IT department during the summers, and I was once assigned the task of erasing a collection of video tapes that had been used for a media class. This was done using a degausser, which was essentially an electromagnet that I ran over the surface of the tapes. However, as far as I could tell, this would leave all the magnetic fields pointing in the same direction rather than zeroing them.

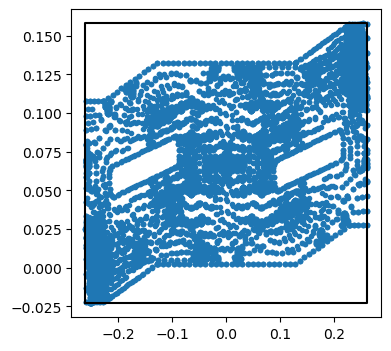

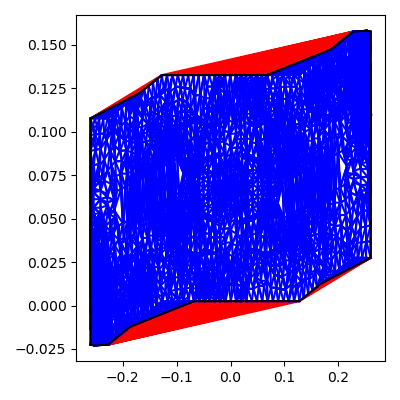

One technique I found for driving the field to zero is to apply a large external field, then repeatedly reorient it while decreasing its magnitude. I decided to try this in 2D, similar to the Ising model, but more classical: The magnetic spins can point in any direction in the plane, and each experiences a torque from the surrounding spins and the external field. The animation below shows each spin's direction in black, the average direction in red, and the total magnitude of the field as time progresses in the lower plot. The external field is shown on the outer edges.
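For anyone who wants to play along, here is a minimal sketch of this kind of simulation. It is not the code behind the animation. It assumes overdamped dynamics (each spin's angle changes in proportion to the torque on it), a spatially uniform external field, periodic boundaries, a linear ramp-down of the field, and a bit of random noise. The function names and all parameter values (`h0`, `n_turns`, `coupling`, `noise`, and so on) are placeholders I made up for illustration.

```python
import numpy as np


def net_magnetization(theta):
    """Magnitude of the mean spin vector (1 = fully aligned, 0 = cancelled)."""
    return float(np.hypot(np.cos(theta).mean(), np.sin(theta).mean()))


def external_field(step, n_steps, h0=5.0, n_turns=20):
    """Assumed schedule: direction rotates while magnitude ramps linearly to zero."""
    frac = step / n_steps
    magnitude = h0 * (1.0 - frac)
    angle = 2.0 * np.pi * n_turns * frac
    return magnitude, angle


def torque(theta, h_mag, h_angle, coupling=1.0):
    """Torque on each spin from its four nearest neighbours and the external field."""
    # np.roll wraps around the grid, so the boundaries are periodic (an assumption).
    neighbours = sum(
        np.sin(np.roll(theta, shift, axis=axis) - theta)
        for axis in (0, 1)
        for shift in (1, -1)
    )
    return coupling * neighbours + h_mag * np.sin(h_angle - theta)


def degauss(n=32, n_steps=4000, dt=0.02, noise=0.3, seed=0):
    """Run the assumed degaussing schedule and record the net magnetization."""
    rng = np.random.default_rng(seed)
    theta = np.zeros((n, n))  # start fully magnetized along +x
    history = [net_magnetization(theta)]
    for step in range(n_steps):
        h_mag, h_angle = external_field(step, n_steps)
        # Overdamped update: the angle moves in proportion to the torque.
        theta += dt * torque(theta, h_mag, h_angle)
        # Random kicks; without them identical spins would stay identical forever.
        theta += np.sqrt(dt) * noise * rng.standard_normal(theta.shape)
        history.append(net_magnetization(theta))
    return theta, np.array(history)


if __name__ == "__main__":
    final_theta, history = degauss()
    print(f"final / initial magnetization: {history[-1] / history[0]:.2f}")
```

Plotting `history` against the step index gives a rough analogue of the lower plot in the animation. How far the final value drops depends heavily on the noise level and the field schedule, both of which are guesses here.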

I think the way I applied the external fields causes the diagonal bias you can see near the end, but overall I'm impressed I was able to reduce the field to 25% of its original value. That's not nearly enough for the sensitivity we need, though!