Now that we're in Florida, and no longer sheltering from COVID, I've actually been working on campus. My department is in the (slow) process of moving to a new building, so I've been temporarily put in one of the large offices shared by grad students. I've noticed an interesting quirk of my chair: now and then, after I get up, it sinks to its lowest height, so I need to raise it again when I sit down. I was curious what was going on, and decided to look into how the lift mechanism works (or doesn't).

Most office chairs use a pneumatic cylinder to set and maintain a given height. The cylinder consists of a tube that can let air in or out when you open a valve, a piston connected to the chair, and a spring linking the tube to the piston:

We've defined a few variables here: L is the uncompressed length of the spring, i.e. the maximum height of the seat; hset is the height the chair is set to when the valve is closed; and h is the rest height. Using these, we can write the three forces acting on the seat:
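A minimal sketch of such a balance, taking upward as positive and assuming that the trapped air is at atmospheric pressure when the valve closes at hset and then follows Boyle's law as the seat moves to h (the isothermal gas model and the sign convention are assumptions here). The three terms are the net gas pressure on the piston, the spring, and the weight:

```latex
F = p_{\text{atm}} A \left( \frac{h_{\text{set}}}{h} - 1 \right) + k \left( L - h \right) - m g
```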

where patm is the atmospheric pressure, A is the cross-sectional area of the cylinder, k is the spring constant, m is the mass of the person on the chair, and g is the acceleration due to gravity. If we set F = 0, we get the rest state of the chair, and can try a few values for the various quantities:

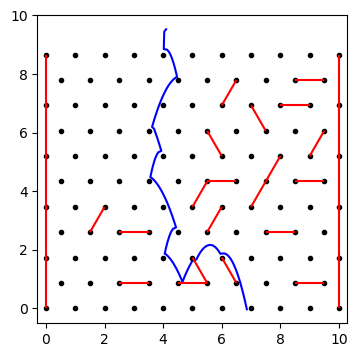

Here we've solved the above equation for h, and then plugged in a range of values for m and hset. I chose L to be 15 cm, set A to correspond to a cylinder diameter of 5 cm, and set k so that a 40 kg load compresses the spring by its full length L. The black lines show contours of constant rest height, whose slope gradually changes with the set height. I decided to replot these points to better show the relationship between the set height and the rest height:

The dashed line shows points where the chair does not move from its set height. I was surprised to see so many points above the line, meaning the chair rises from its set point, but I suspect that's due to the spring constant I used, which may be way off.
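To make the solve-and-plug-in step concrete, here is a minimal Python sketch. It assumes a force balance of the form F = patm·A·(hset/h − 1) + k(L − h) − mg, i.e. the trapped air is at atmospheric pressure when the valve closes and then compresses isothermally. That model, the standard values used for patm and g, and the names `net_force` and `rest_height` are assumptions for illustration, not taken from the original calculation. Setting F = 0 and multiplying through by h gives a quadratic in h, and the sketch returns its positive root.

```python
import math

# Parameters from the text: L = 15 cm, 5 cm cylinder diameter,
# and a spring that a 40 kg load compresses by L.
# P_ATM and G are standard values, assumed here.
P_ATM = 101_325.0                     # atmospheric pressure, Pa
G = 9.81                              # gravitational acceleration, m/s^2
L = 0.15                              # uncompressed spring length, m
A = math.pi * (0.05 / 2) ** 2         # cylinder cross-sectional area, m^2
K = 40.0 * G / L                      # spring constant, N/m


def net_force(h, m, h_set):
    """Net upward force on the seat at height h (assumed model)."""
    gas = P_ATM * A * (h_set / h - 1.0)   # trapped air minus atmosphere
    spring = K * (L - h)                  # spring pushes up when compressed
    weight = m * G
    return gas + spring - weight


def rest_height(m, h_set):
    """Height where net_force is zero.

    F = 0 multiplied by h gives K*h^2 + b*h + c = 0 with
    b = P_ATM*A + m*G - K*L and c = -P_ATM*A*h_set.
    Since c < 0, exactly one root is positive.
    """
    b = P_ATM * A + m * G - K * L
    c = -P_ATM * A * h_set
    return (-b + math.sqrt(b * b - 4.0 * K * c)) / (2.0 * K)


# Sweep a few masses and set heights, as in the plots.
for h_set in (0.05, 0.10, 0.15):
    for m in (0.0, 40.0, 80.0):
        h = rest_height(m, h_set)
        print(f"h_set = {h_set:.2f} m, m = {m:5.1f} kg -> h = {h:.4f} m")
```

Under this assumed model the gas term vanishes at h = hset, so the seat rises from its set point whenever k(L − hset) > mg. With k chosen as above, that is any mass below 40 kg × (1 − hset/L), which would be consistent with seeing many points above the dashed line.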

As interesting as this was to work through, it doesn't bring me any closer to explaining why my chair drops while I'm not sitting on it, rather than rises. Time to write a grant proposal for "Pneumatic Posterior Support System Instabilities: Sources & Solutions"!