A number of years ago, Marika and I got a Peloton bike, and I've often wondered whether the data from logged rides is available. This week I did some digging and found a couple of people exploring the same question. It turns out Peloton offers the same kind of REST API I learned about when I was exploring the PUC data! Unfortunately, it's largely undocumented, but by following their work I was able to get what I was interested in. The bike records several statistics: my pedaling cadence in revolutions per minute (rpm), the resistance applied to the pedals as a percentage, the output power resulting from those two factors in watts, and my heart rate, measured by my watch, in beats per minute (bpm).
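Because the API is undocumented, the exact response format is something you have to discover yourself. As a minimal sketch, assume a ride's per-sample data comes back as a list of metrics, each tagged with a `slug` and holding a list of `values`, alongside a list of timestamps. Those field names (`seconds_since_pedaling_start`, `metrics`, `slug`, `values`) and the numbers below are hypothetical placeholders, not a confirmed Peloton schema or real ride data. Flattening that into one column per statistic makes the rest of the analysis straightforward:

```python
import json

# Hypothetical excerpt of one ride's per-sample data. The field names and the
# made-up numbers are assumptions for illustration, not a documented schema.
SAMPLE = """
{
  "seconds_since_pedaling_start": [0, 5, 10],
  "metrics": [
    {"slug": "cadence", "values": [80, 85, 90]},
    {"slug": "resistance", "values": [35, 35, 40]},
    {"slug": "output", "values": [120, 130, 155]},
    {"slug": "heart_rate", "values": [110, 118, 125]}
  ]
}
"""


def metrics_to_columns(payload: dict) -> dict:
    """Flatten the per-metric lists into one column per metric, keyed by slug."""
    columns = {"t": list(payload["seconds_since_pedaling_start"])}
    for metric in payload["metrics"]:
        columns[metric["slug"]] = list(metric["values"])
    return columns


ride = metrics_to_columns(json.loads(SAMPLE))
print(ride["cadence"])  # [80, 85, 90]
```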

Because I'm a physicist, I was both delighted by the use of SI units for the power (watts) and total energy (joules) and disappointed that resistance is given only as a percentage. I know that the output power depends on both cadence and resistance, so we can plot the three together and see what the relation is:

It's a little hard to see, but points with similar resistance (color) show a roughly linear relation between cadence and output, and the slope increases with resistance. Unfortunately, Peloton rounds the data to integers, so it's hard to measure the relation precisely.
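One way to put a number on "the slope increases with resistance" is to fit output against cadence separately at each resistance setting. Mechanical power is torque times angular velocity, $P = \tau\omega$, and a cadence $c$ in rpm corresponds to $\omega = 2\pi c/60$ rad/s. If we assume, as an idealization, that a given resistance setting applies a roughly constant torque, then the fitted slope $dP/dc$ (W per rpm) gives an effective torque $\tau = 60\,(dP/dc)/(2\pi)$ in N·m. The sketch below is a hypothetical least-squares fit under that assumption; the real braking torque may well vary with speed, so treat the torque column as a rough indicator only. Fitting many integer-rounded samples at once also averages out some of the rounding noise.

```python
import math
from collections import defaultdict


def fit_slope(xs, ys):
    """Ordinary least-squares slope and intercept of ys against xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def slopes_by_resistance(cadence, resistance, output, min_points=3):
    """Fit output vs cadence within each resistance group.

    Returns {resistance: (slope in W/rpm, effective torque in N*m)}, where the
    torque assumes the idealized model P = tau * 2*pi*cadence/60.
    """
    groups = defaultdict(lambda: ([], []))
    for c, r, p in zip(cadence, resistance, output):
        groups[r][0].append(c)
        groups[r][1].append(p)

    result = {}
    for r, (cs, ps) in sorted(groups.items()):
        # Skip groups too small, or with a single cadence, to define a slope.
        if len(cs) >= min_points and len(set(cs)) > 1:
            slope, _ = fit_slope(cs, ps)
            torque = slope * 60 / (2 * math.pi)
            result[r] = (slope, torque)
    return result
```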

The classes we take on the bike give target ranges for cadence and resistance over the course of a ride. The bike shows where you are relative to the min and max, and I've noticed that sometimes I can stay roughly in the center, while other times I'm ping-ponging from end to end. My impression was that higher resistances made it harder to stay steady, so to test that I split the rides into segments wherever the target resistance or cadence changed, and plotted the standard deviation of my cadence within each segment:
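The segmentation step can be sketched as: walk through the per-sample targets, start a new segment whenever the target changes, and take the sample standard deviation of cadence within each segment. This assumes targets are available as one comparable value per sample (for example a hypothetical `((res_min, res_max), (cad_min, cad_max))` tuple); how Peloton actually encodes class targets is not something this sketch claims to know.

```python
import statistics


def split_by_targets(targets):
    """Return (start, end) index pairs for runs where the target stays constant."""
    segments = []
    start = 0
    for i in range(1, len(targets)):
        if targets[i] != targets[i - 1]:
            segments.append((start, i))
            start = i
    if targets:
        segments.append((start, len(targets)))
    return segments


def cadence_spread(cadence, targets):
    """List of (target, cadence standard deviation) for each constant-target segment."""
    out = []
    for start, end in split_by_targets(targets):
        chunk = cadence[start:end]
        if len(chunk) >= 2:  # statistics.stdev needs at least two samples
            out.append((targets[start], statistics.stdev(chunk)))
    return out
```

Keying each spread by its target makes it easy to group the segments afterwards, for instance by whether the target resistance is above or below some threshold.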

This doesn't show a relation as cut-and-dried as I expected, but the largest deviations all occur at resistances above 30%, which is where I start to feel strained.

The final thing I wanted to look at was long-term trends: can I see improvement in my performance? I computed summary statistics for each ride and plotted them against the number of days since we got the bike. First I looked at the distribution of output power over each ride:

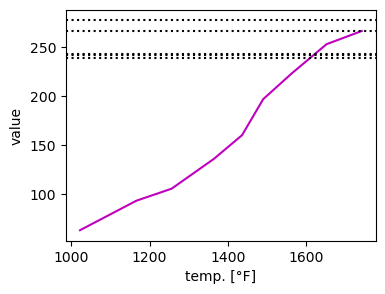

The dots show the median power, and the lines span the 25th to 75th percentiles. At the very beginning I was a bit overambitious and tried a class that was way beyond my abilities, then settled into a more consistent level. After a significant break (our time living in the RV, which barely has space for us and Eros, let alone a bike), I've been on a nice upward trend. The other improvement I wanted to look for was in heart rate: ideally, I should be able to produce the same output power with a lower heart rate.
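The per-ride summary behind a plot like this is small: the days elapsed since the bike arrived, plus the median and quartiles of that ride's output power. A minimal sketch using Python's standard library follows; the function name `ride_summary` and its arguments are hypothetical, and the `"inclusive"` quantile method is one reasonable choice (it interpolates between the observed samples) rather than necessarily what produced the figure.

```python
import statistics
from datetime import date


def ride_summary(ride_date: date, output, purchase_date: date) -> dict:
    """Days since the bike arrived, plus 25th/50th/75th percentiles of output power."""
    q1, median, q3 = statistics.quantiles(output, n=4, method="inclusive")
    return {
        "days": (ride_date - purchase_date).days,
        "p25": q1,
        "median": median,
        "p75": q3,
    }
```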

Since my heart rate takes time to respond to changes in effort, I decided a better measure was to compare my maximum heart rate against the total work done over each ride. You can see above that I do manage to hit higher work totals for the same heart rate, and for the lower work totals my heart rate is lower. Progress!
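Total work is just power integrated over time, $W = \sum_i P_i\,\Delta t$, so it can be recomputed from the per-sample output as a cross-check on the total energy the API reports. The sketch below assumes evenly spaced samples with a fixed interval `dt_seconds` (a hypothetical parameter; check the actual spacing of your timestamps) and returns the work in kilojoules alongside the peak heart rate for the ride.

```python
def work_and_peak_hr(output_watts, heart_rate, dt_seconds=1.0):
    """Total work in kJ (sum of power times sample interval) and peak heart rate."""
    total_joules = sum(p * dt_seconds for p in output_watts)  # W * s = J
    return total_joules / 1000.0, max(heart_rate)
```

Plotting one `(work, peak heart rate)` point per ride then gives the comparison described above.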

If you have your own Peloton and want to see your stats (or maybe develop a Peloton analysis package?), you can find my code here.